TL;DR Returning to Korean study after a decade, I kept running into "leeches”, cards I repeatedly missed despite practicing. I'm experimenting with a technique that takes each mistake, diagnoses what I meant vs. what I said and then shows a short burst of contrasting examples (input flooding) in different contexts. Lastly it asks me to speak a sentence using the correct form and auto-grades the response.

Background

A spaced repetition system (SRS) is a review system (usually software) that schedules flashcards at increasing intervals to match how memory fades. When it works, you retain more while studying less because you stop reviewing things you already know and focus on what you're about to forget.

SRS has a funny failure mode: some items never want to stick. In the SRS world these are called leeches. A leech might be a single troublesome word, but often it's a pair of confusable forms (A vs. B) that you haven't cleanly separated in your head. Old-school advice is to delete leeches to protect your study time. That's reasonable if your only tool is "more reviews.” In 2025 we have other options.

... some cards are not just hard, but really hard, so hard that if we don’t do something about them, we can keep forgetting and relearning them over and over again, causing them to consume a ridiculous amount of our study time while providing little benefit. The spaced-repetition community calls these extremely difficult cards leeches because they suck away your study time.

I've had some time to think about this problem and I have been brainstorming one possible solution to this issue. I've explored these ideas in Koala, my personal SRS app, but the ideas I talk about should still be useful to Anki users- especially plugin authors, because it's about how to treat mistakes, not about a specific app.

We have LLMs now

SRS is traditionally passive: see prompt -> reveal answer -> self-assess. That's fine for recognition. It's weaker for production

, the skill of actually saying or writing the thing on demand.

Large language models with this problem.

They can:

- Check whether a sentence you produced is grammatical and natural enough.

- Explain briefly why something is off.

- (In the case of leeches) Generate clustered examples that contrast the thing you meant with the thing you keep confusing it with.

That makes it realistic to move from flip and self-grade” to

produce, get a rationale, and immediately practice the exact contrast you're missing.”

The problem with leeches

Leeches waste time because they monopolize reviews without improving our skills. During my Korean studies, the real issue often wasn't I forgot A,” but rather

I keep reaching for B when A is required.” I could certainly add more example cards, but without addressing the contrast behind the mistake, more raw exposure won't help much.

The fix needs three parts:

- A diagnosis of what went wrong.

- Examples that isolate the contrast (what we think the word is vs. what it actually is).

- A language production task that makes you choose the right form.

The idea: input floods + forced production

Here's the loop I'm testing:

- Capture the mistake. When I answer incorrectly, I store the prompt, my answer, what I meant to say, and a brief LLM explanation of why my answer is wrong.

- Diagnose the contrast. The model labels a target (A) and an optional contrast (B) and summarizes the rule in a short summary.

- Flood with examples. Generate a small set of natural sentences for A and, if relevant, a matching set for B. The point is to let my brain spot patterns without a long explanation.

- Force production. Immediately ask me to answer a question that requires A (or B). I speak or type; the model grades against a narrow rubric and gives the smallest possible nudge.

This is brief by design: a few examples, one or two production prompts, then out. The goal is to fix the contrast, not to create another deck to maintain.

A concrete example (English)

Suppose you confuse famous and notorious when studying English.

- A-stream (target): "Chicago is famous for high-calorie food.”

- B-stream (contrast): "LLMs are notorious for hallucinating.”

- Production prompt: "Why is Kane County famous?”

- Possible answer: "Kane County is famous for the city of Saint Charles, pickle capital of the world.”

A quick check confirms that famous (positive/neutral) fits, while notorious (negative) doesn't.

The MVP

All of this becomes a single JSON payload that the UI consumes to render the mini-lesson.

export const InputFloodLessonSchema = z.object({

fix: z.object({ original: z.string(), corrected: z.string() }),

diagnosis: z.object({

target_label: z.string(),

contrast_label: z.string().nullable().optional(),

why_error: z.string().max(240),

rules: z.array(z.string()).min(1).max(3),

}),

flood: z.object({

A: z.array(SentenceSchema).min(2).max(4),

B: z.array(SentenceSchema).min(2).max(4).nullable().optional(),

}),

production: z

.array(

z.object({

prompt_en: z.string(),

answer: z.string(),

}),

)

.min(5)

.max(6),

});

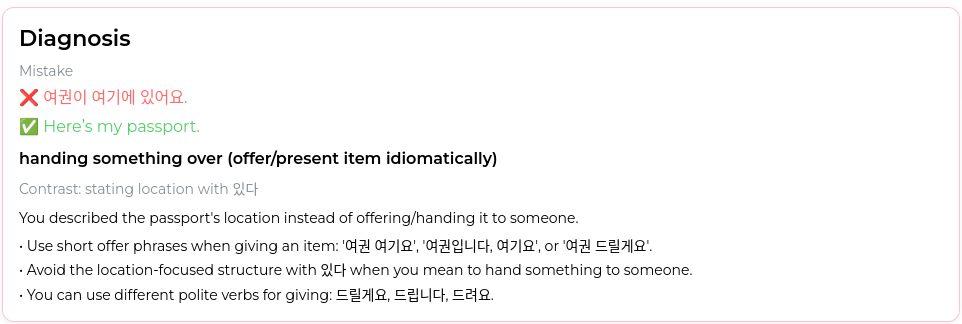

The diagnosis step:

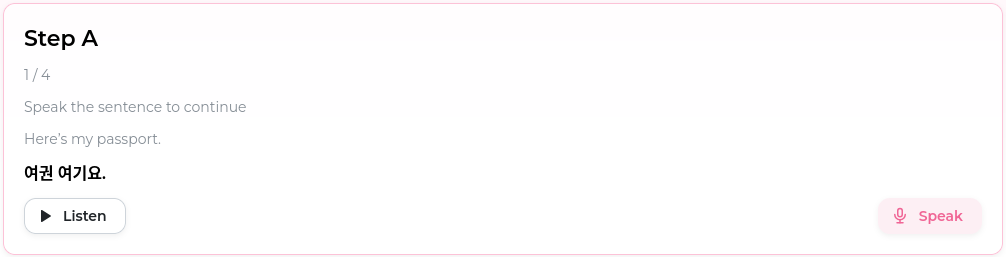

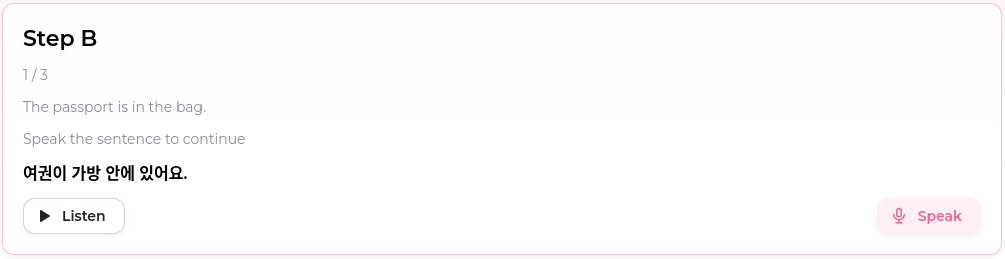

Base case input flood:

The contrast

step that tells me what I actually said so that I can understand my mistake.

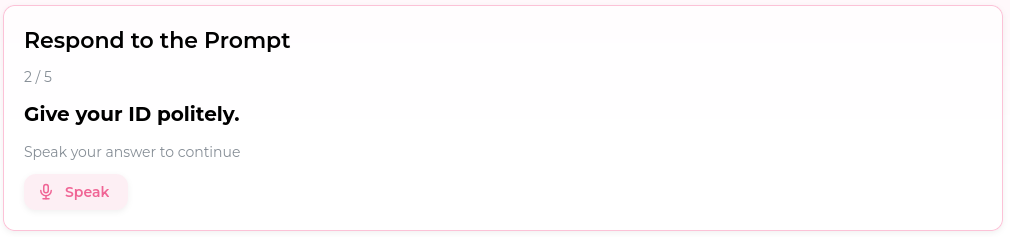

The final production

step:

Observations

I don't have quantitative data yet, so this is mostly vibes, sorry:

- It helps with specific Korean patterns that used to reappear after weeks.

- Sessions take longer than a normal card review, so I trigger them only for repeat offenders.

- The biggest tuning problem is over-helpfulness: models like to "improve” already acceptable sentences. Tight rubrics and explicit roles help.

- Models sometimes fixate on certain vocabulary; seeding varied words into the examples might spread usage naturally (TODO).

- Cost/latency have been fine for moderate use; caching by mistake type keeps generation down.

Prior work

The "input flooding” idea comes from second-language acquisition research in the 1990s. My variation is to (a) make the floods explicitly contrastive for confusable pairs and (b) follow immediately with forced production that is auto-graded with a narrow rubric. The intention is to move quickly from recognition to controlled use.

Ideas welcome

If you work on SLA or language tooling, I'd love to hear:

- Rubrics: what's the smallest effective rubric for open-ended production?

- Datasets: high-value confusable pairs in any language.

- Metrics: beyond vibes-e.g., leech rate over time, time-to-mastery for tagged contrasts, speech error rate on targeted prompts, retention at 7/30/60 days.

If you focus on Korean specifically, I can share a small, anonymized, single-learner dataset of several thousand speaking errors (my own) from the last two years.

Roadmap

Better speech scoring, more shadowing variants to reduce monotony (without gamification), difficulty calibration from error history, and clustering similar errors so one fix generalizes to many cards.

Code / discussion

Source is public. If you're building something related, I'd like to compare notes.

https://github.com/rickcarlino/koalacards

Research

I've compiled a list of research findings about input flooding.